I recently moved this blog from an old server to a new one. This is my first post on the new server. Let’s hope nothing explodes.

For many years I have been a more or less happy customer of Hosteurope, a hosting company headquartered in Germany (with hosting locations in other European countries). Many years ago I signed up with Hosteurope for one of their so-called “VPS” (Virtual Private Server) products. That’s basically a virtual server running on one of their larger server clusters.

About a year ago I realized that I was paying about €156 per year for that Hosteurope server. That’s not a huge amount, but as I’ve been involved in cloud hosting for many of our projects, I knew there were many other options with a far better price/value ratio.

Being lazy, as humans are, I didn’t want to migrate my server. In fact, it would have been enough for me if Hosteurope had lowered my service charge to the price of the equivalent VPS they currently sell. Over the years, hardware has gotten cheaper, and so has hosting. In short: Hosteurope’s newer VPS products now cost less and provide more power.

I simply love attractive cost/value ratios.

So last year I reached out to Hosteurope and asked whether they could offer me my old VPS at the new price (~€4 less per month or so). It’s not much of a difference, but it’s the principle of it: I just like to be treated fairly. They did not agree and simply said: “You have to stick to your old product, or you may also terminate your contract.” Last year I missed the cancellation deadline (again, it wasn’t really a priority for me), and so the contract renewed for another year. This year, though, I remembered and terminated on time.

AWS has almost unlimited capabilities

Our businesses have been customers of Amazon Web Services for over 10 years. We’ve been with them since almost their first service, so I’ve been working with AWS for a long, long time. We even had an incident where we literally spent $4,000 in minutes, accidentally. That can happen if you “overdo” extreme automation 🙂

However, when used properly, AWS can be very useful and cost-efficient, especially for hosting my small blog kozen.de and the other sites I run on this server.

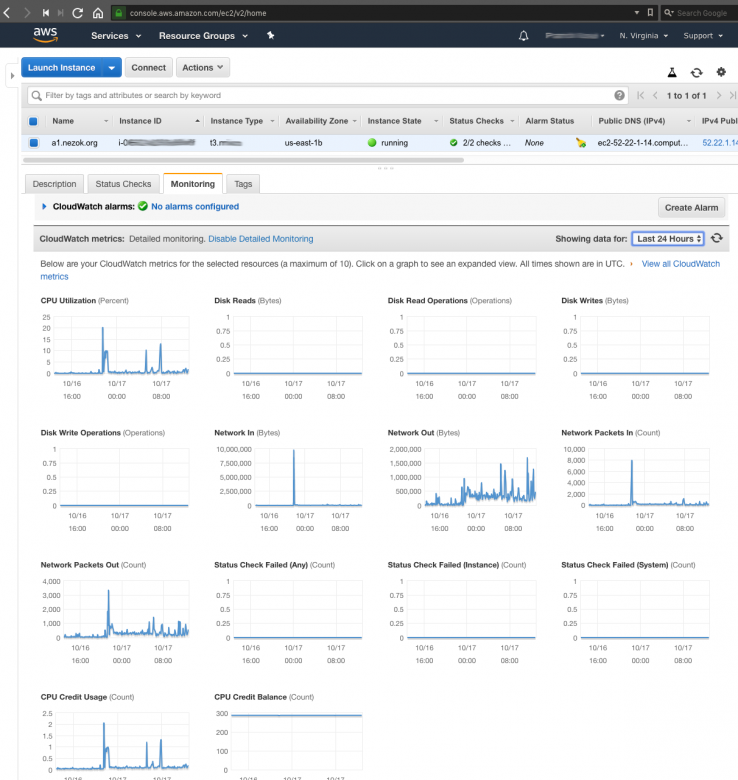

AWS has some great services I was able to use for this move, including S3 (Simple Storage Service), EC2 (Elastic Compute Cloud), the CloudFront content delivery network, the CloudWatch monitoring service and many others as well.

AWS’s own Amazon Linux 2 is also a great Linux distribution that I’ve grown to like, and I’m quite confident it will keep getting maintained for the next 10 years. The old Debian on my Hosteurope server wasn’t being maintained by Hosteurope, especially as it had some funky customizations and source settings that haven’t gotten the love they deserved in recent years. Hence, I ended up with an outdated system.

I’m confident this will be better now. And besides, instead of paying €156 I’m expecting to pay at most €60 per year. That’s a fluffy 60% savings, and I haven’t even factored in Reserved Instance discounts.
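For the curious, the savings figure can be double-checked with a few lines of Python. This is just a back-of-the-envelope sketch: the two yearly prices are the ones quoted above, the €60 is an expected upper bound rather than an actual bill, and the function name `savings_percent` is made up for illustration.

```python
def savings_percent(old_cost: float, new_cost: float) -> float:
    """Return the relative saving of new_cost versus old_cost, in percent."""
    if old_cost <= 0:
        raise ValueError("old_cost must be positive")
    return (old_cost - new_cost) / old_cost * 100


# Yearly prices from the post: €156 at Hosteurope,
# at most €60 expected on AWS (an estimate, not a real invoice).
OLD_YEARLY_EUR = 156
NEW_YEARLY_EUR = 60

saved_eur = OLD_YEARLY_EUR - NEW_YEARLY_EUR
saved_pct = savings_percent(OLD_YEARLY_EUR, NEW_YEARLY_EUR)

print(f"Saved per year: €{saved_eur} ({saved_pct:.1f}%)")
```

With these numbers the saving works out to €96 per year, or roughly 61.5%, so “60%” is a slightly conservative round-down.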

Let’s see how this goes. For now, I’d be happy if nothing crashes in the next few days after this migration 😂

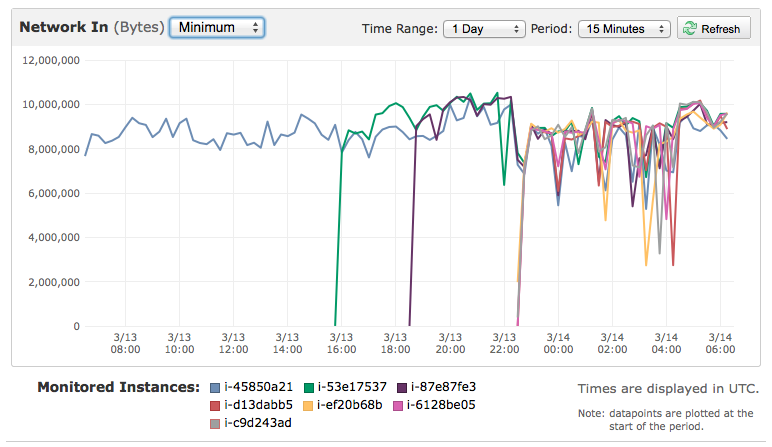

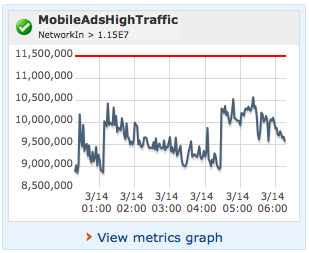

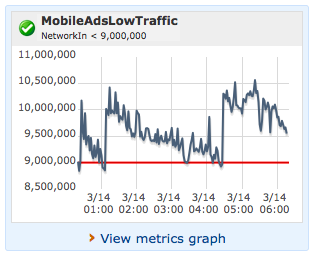

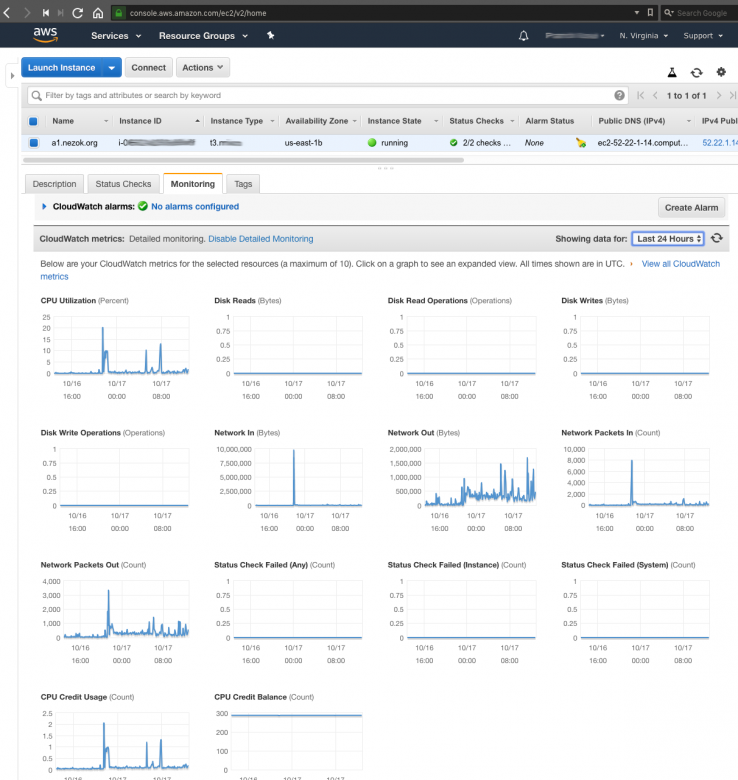

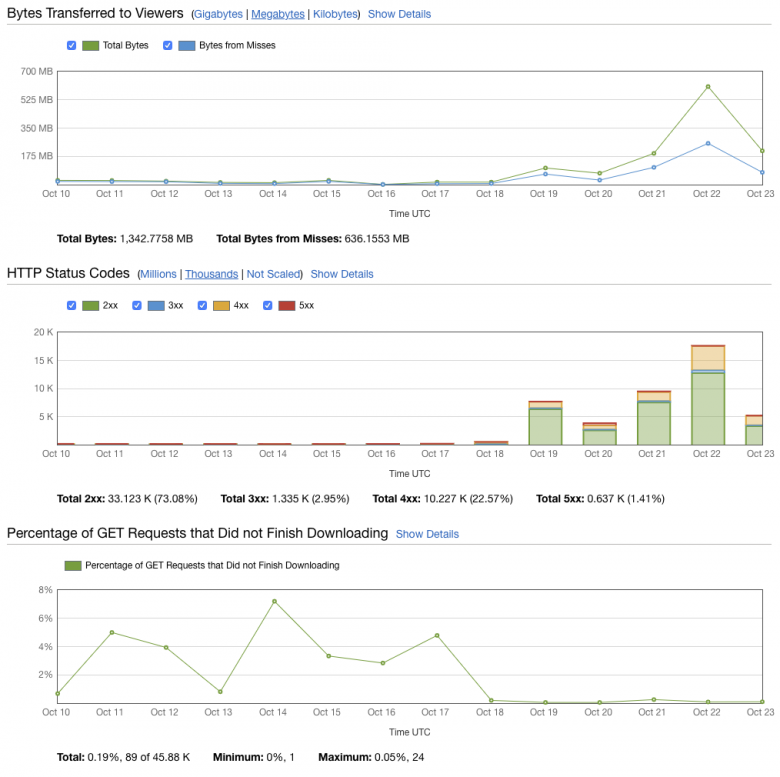

P.S.: Monitoring capabilities are also quite neat. I can monitor the performance at all times. Have a look:

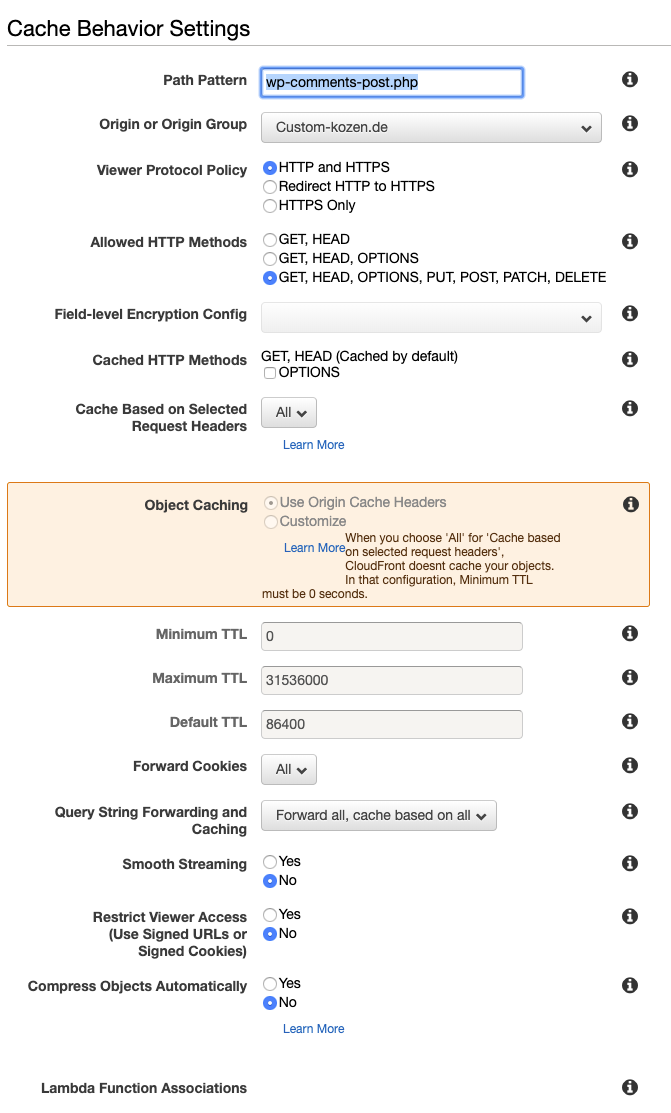

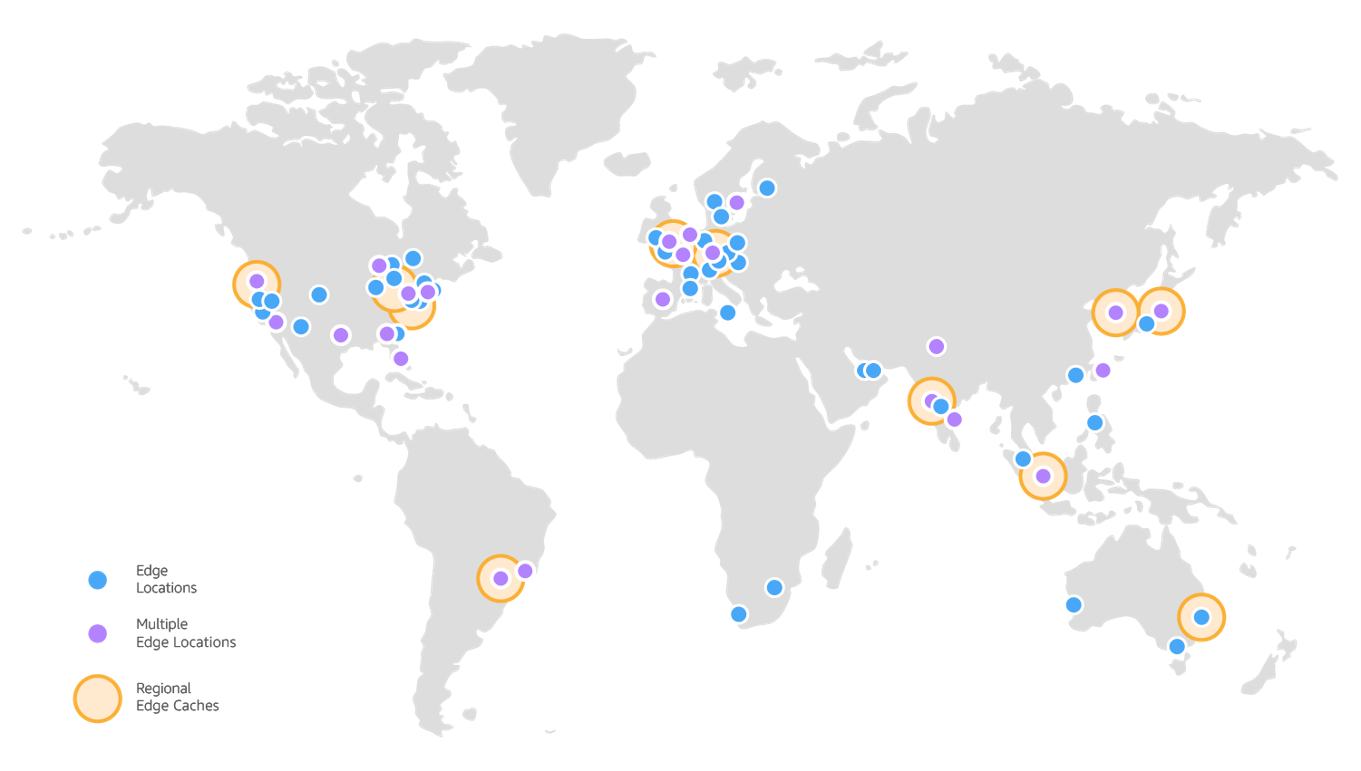

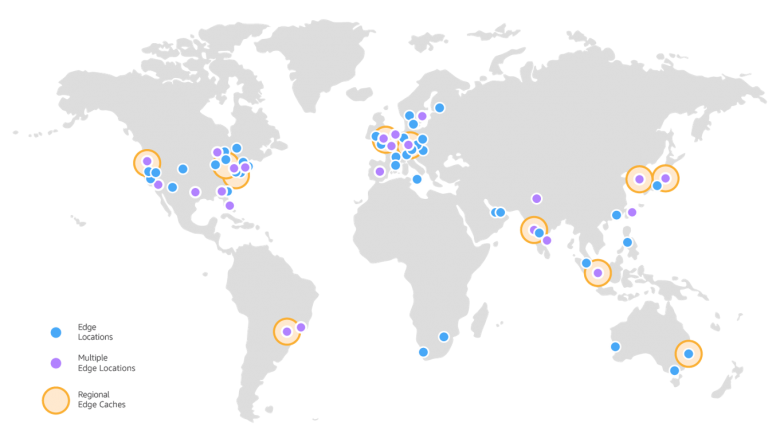

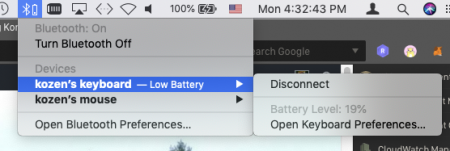
